Machine Consciousness Tech Demo

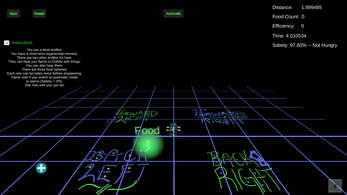

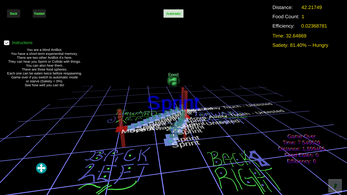

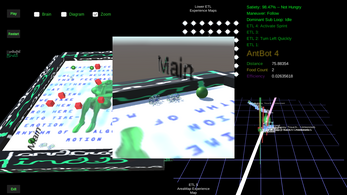

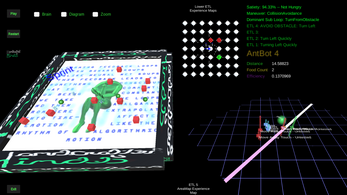

The ant has only two senses: it can smell food (poorly, and only when it is close enough) and it can sense collisions (touch). The idea is that an experience is a reaction to the environment, and that larger, more complex experiences are strange-loop combinations of smaller reactions. From the relative distances between experiences, the AntBot builds up a map of its surroundings. This base map requires no recognition or other predetermined information, but it can later be correlated with recognized information. This bedrock of experiences allows the AntBot to remember where things are in terms of itself rather than in terms of an interpretation of what those things are. I believe this is the most basic kind of map needed for proper sensor fusion.

We should keep stacking hierarchies of experience maps until we eventually arrive at a map that resembles the ETL 5 Area Map. From there, we can add neural-net recognition or other recognition information to further specify the behavior of our autonomous bots. For gaming, the same approach can add reactive fluidity to the actions of GOAP/HTN systems and enable reflexive animation.
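To make the idea of a map "in terms of itself" concrete, here is a minimal Python sketch. It is not the demo's actual Unity code: the class names (`Experience`, `ExperienceMap`), the `kind` labels, and the use of dead reckoning to track the ant's pose are all assumptions made for illustration. Each experience is stored only as a raw sensation plus the position at which the ant had it, and is later recalled as a distance and bearing relative to the ant.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Experience:
    """A raw sensation and where the ant was when it occurred (hypothetical type)."""
    kind: str              # e.g. "smell" or "touch"; a reaction, not an interpretation
    x: float               # position in the ant's own dead-reckoned frame
    y: float
    strength: float = 1.0


@dataclass
class ExperienceMap:
    """Tracks the ant's pose by dead reckoning and remembers its experiences."""
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0   # radians; 0 is the direction the ant faced at the start
    experiences: list = field(default_factory=list)

    def turn(self, angle: float) -> None:
        self.heading += angle

    def step(self, distance: float) -> None:
        self.x += distance * math.cos(self.heading)
        self.y += distance * math.sin(self.heading)

    def record(self, kind: str, strength: float = 1.0) -> None:
        # Store the sensation at the ant's current position.
        self.experiences.append(Experience(kind, self.x, self.y, strength))

    def relative_to_self(self, exp: Experience) -> tuple:
        """Return (distance, bearing) of an experience relative to the current pose."""
        dx, dy = exp.x - self.x, exp.y - self.y
        bearing = math.atan2(dy, dx) - self.heading
        # Wrap the bearing into [-pi, pi).
        bearing = (bearing + math.pi) % (2 * math.pi) - math.pi
        return math.hypot(dx, dy), bearing

    def nearest(self, kind: str):
        """Return the closest remembered experience of the given kind, or None."""
        candidates = [e for e in self.experiences if e.kind == kind]
        if not candidates:
            return None
        return min(candidates, key=lambda e: math.hypot(e.x - self.x, e.y - self.y))


# Usage: smell something faintly, wander off, bump into a wall, then recall the smell.
ant = ExperienceMap()
ant.step(3.0)
ant.record("smell", strength=0.4)
ant.turn(math.pi / 2)
ant.step(4.0)
ant.record("touch")

food = ant.nearest("smell")
distance, bearing = ant.relative_to_self(food)
```

In this sketch the remembered smell ends up about four units directly behind the ant, without the map ever knowing that the smell was "food". A higher-level map could be layered on top by treating clusters of such experiences as single experiences in their own right, which is one possible reading of the hierarchy described above.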

| Field | Value |
| --- | --- |
| Status | Released |
| Category | Other |
| Platforms | HTML5 |
| Author | HandcraftedMinds |
| Made with | Unity |
| Tags | Unity |
| Average session | A few minutes |
| Links | Homepage |
